Implementing parallel loops using nested parallelism

Spawn is a subroutine that executes or run at the same time as that of parent. So by the

Spawning both parent and child work simultaneously. The main motive behind for evolving the concept of spawning is to achieve parallelism, which results increase the overall performance of computer.

Work is defined as total time required for completing the entire multithreaded computation on a single processor.

Span is defined as the maximum time required for completing the strands along any path in the directed acyclic graph (DAG). It is an expensive path which contains maximum number of strands.

Work is the running time of a computation on a single processor.

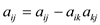

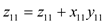

Consider it as .

.

Now suppose there are unlimited numbers of processors. Then the

span is denoted by

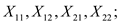

The ratio of  and

and ,

that is

,

that is  gives the

parallelism of the multithreaded computation.

gives the

parallelism of the multithreaded computation.

a.

Implementing parallel loop of SUM-ARRAY using nested parallelism (spawn and sync):

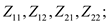

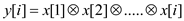

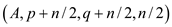

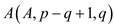

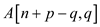

Consider the 2 arrays of size  say

say

and

and .

.

Suppose  is the third

array which stores the sum of the given two arrays. The

implementation of NESTED-SUM-ARRAYS algorithm is similar to

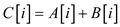

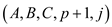

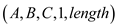

MAT-VEC-MAIN-LOOP. The NESTED-SUM-ARRAYS algorithm has three

arrays(A,B,C) and the range of arrays(i,j) as a parameter.

In the below algorithm i and j specify the range up

to which addition of two array perform. The implementation of

SUM-ARRAYS using nested Parallelism is as follow:

is the third

array which stores the sum of the given two arrays. The

implementation of NESTED-SUM-ARRAYS algorithm is similar to

MAT-VEC-MAIN-LOOP. The NESTED-SUM-ARRAYS algorithm has three

arrays(A,B,C) and the range of arrays(i,j) as a parameter.

In the below algorithm i and j specify the range up

to which addition of two array perform. The implementation of

SUM-ARRAYS using nested Parallelism is as follow:

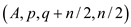

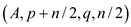

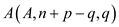

NESTED-SUM-ARRAYS

if

else

Spawn NESTED-SUM-ARRAYS

NESTED-SUM-ARRAYS

sync

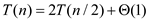

Analyzing the above modified algorithm:

Here three methods which are work, span and parallelism are used for analyzing the algorithm which is as follow:

Work:

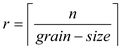

Consider the size of the array as , calculate

the sum by calling procedure having argument as follow,

, calculate

the sum by calling procedure having argument as follow,

NESTED-SUM-ARRAYS

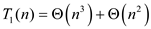

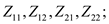

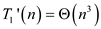

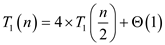

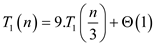

Work , can be

given by below recurrence

, can be

given by below recurrence

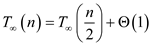

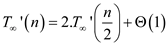

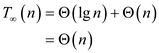

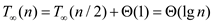

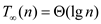

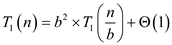

Applying Master theorem (In the analysis of the algorithm, master theorem gives the solution in asymptotic term for the recurrence relation),

.

.Span:

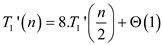

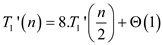

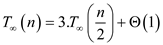

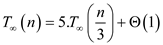

Span of the above procedure can be given by below recurrences,

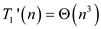

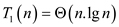

Applying master theorem (In the analysis of the algorithm, master theorem gives the solution in asymptotic term for the recurrence relation),

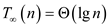

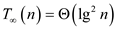

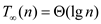

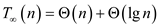

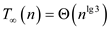

Hence span of NESTED-SUM-ARRAYS algorithm is

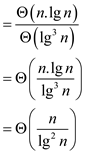

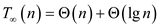

Parallelism:

Parallelism is the ratio of work by span that is ratio of

by

by .

.

Therefore,

Parallelism=

=

Hence span of NESTED-SUM-ARRAYS algorithm is

b.

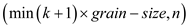

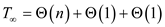

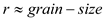

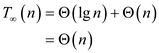

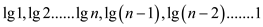

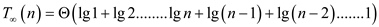

Consider .

.

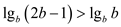

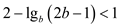

Since,

, therefore

, therefore

Since  varies from

0 to

varies from

0 to (for loop

line number 4 of the algorithm SUM-ARRAYS’ on 27-1 of the

textbook), therefore maximum value of

(for loop

line number 4 of the algorithm SUM-ARRAYS’ on 27-1 of the

textbook), therefore maximum value of  will

be

will

be .

.

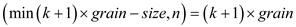

Now, (line number 5 of the algorithm SUM-ARRAYS’)

will have

value always less than or equal to n

will have

value always less than or equal to n

Therefore,

Now in each call of ADD-SUBARRAY procedure (problem 27-1 of the

textbook), it has for loop runs for 1 time and contributes a single

value to  therefore it

will take

therefore it

will take  time.

time.

Now since

SUM_ARRAYS’ runs

runs

times and each time it takes

times and each time it takes  time to

execute.

time to

execute.

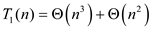

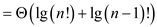

Therefore total work , will be

given by,

, will be

given by,

… … (1)

… … (1)

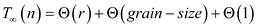

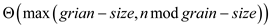

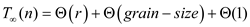

Span of the SUM_ARRAYS’ will be

equal to span of the single call to ADD-SUBARRAY plus constant time

work done in first 3 lines plus

will be

equal to span of the single call to ADD-SUBARRAY plus constant time

work done in first 3 lines plus  in order to

account the overhead of spawning

in order to

account the overhead of spawning  calls to

ADD-SUBARRAY.

calls to

ADD-SUBARRAY.

Therefore,

In this case

Therefore,

That is,

Parallelism=

=

=

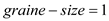

when

grain-size is 1.

when

grain-size is 1.c.

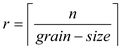

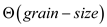

For a given  each

iteration of for loop of procedure SUM-ARRAYS’

each

iteration of for loop of procedure SUM-ARRAYS’  will result

in

will result

in  times

iteration of the ADD-SUBARRAY procedure accept for the last

iteration. Last iteration it will run for

times

iteration of the ADD-SUBARRAY procedure accept for the last

iteration. Last iteration it will run for  times.

times.

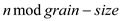

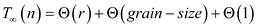

Therefore span of ADD-SUBARRAY will be,

=

=

Parallelism=

Since parallelism

Therefore, to achieve a maximum parallelism, span should be minimum.

.

.

That is,

should be

minimum.

should be

minimum.

Since,

Therefore  will be

minimum when,

will be

minimum when,

Therefore,

That is,

Saving temporary space in matrix multiplication

Spawn is a subroutine that executes or run at the same time as that of parent. So by the

Spawning both parent and child work simultaneously. The main motive behind for evolving the concept of spawning is to achieve parallelism, which results increase the overall performance of computer.

Work is defined as total time required for completing the entire multithreaded computation on a single processor.

Span is defined as the maximum time required for completing the strands along any path in the directed acyclic graph (DAG). It is an expensive path which contains maximum number of strands.

Work is the running time of a computation on a single processor.

Consider it as .

.

Now suppose there are unlimited numbers of processors. Then the

span is denoted by

The ratio of  and

and ,

that is

,

that is  gives the

parallelism of the multithreaded computation.

gives the

parallelism of the multithreaded computation.

a.

A recursive multithreaded algorithm that removes the essence

for the ephemeral matrix say  at

the cost of increasing the span to

at

the cost of increasing the span to

It can be done by computing  and

inserting sync at a particular location as mentioned in the

following algorithm,

and

inserting sync at a particular location as mentioned in the

following algorithm,

P-MATRIX-MULTIPLY

//Find number of rows in X array

//Use nested loop to initialize each element of Z matrix with 0 and then multiply

//X and Y matrix by calling another function

parallel for  to

to

parallel for  to

to

//Call function to multiply both matrix and store it simultaneously into

//another matrix

P-MATRIX-MULTIPLY-RECURSIVE

P-MATRIX-MULTIPLY-RECURSIVE

//Find number of rows in X array

//Check number of rows is 1 or not.

if

//multiply X and Y matrix and then store in Z matrix

else partition  ,

, and

and

into

into

sub-matrices

sub-matrices

Spawn P-MATRIX-MULTIPLY-RECURSIVE

spawn P-MATRIX-MULTIPLY-RECURSIVE

spawn P-MATRIX-MULTIPLY-RECURSIVE

P-MATRIX-MULTIPLY-RECURSIVE

sync

spawn P-MATRIX-MULTIPLY-RECURSIVE

spawn P-MATRIX-MULTIPLY-RECURSIVE

spawn P-MATRIX-MULTIPLY-RECURSIVE

P-MATRIX-MULTIPLY-RECURSIVE

sync

b.

Work:

The work of P-MATRIX-MULTIPLY procedure can be calculated by serializing the parallel for loop and calculating its running time and comparing it with procedure P-MATRIX-MULTIPLY-RECURSIVE.

Since both of these two procedures are executing one by one therefore the procedure having maximum work will be the total work done.

Work performed by doubly nested parallel for loop of

P-MATRIX-MULTIPLY algorithm is  … …

(1)

… …

(1)

Now there is another procedure P-MATRIX-MULTIPLY-RECURSIVE. Its

work  can be

defined by recurrence given below (consider 8 recursive

function),

can be

defined by recurrence given below (consider 8 recursive

function),

… … (2)

… … (2)

Applying master theorem (In the analysis of the algorithm, master theorem gives the solution in asymptotic term for the recurrence relation),

Therefore from (1) and (2) total work  will

be,

will

be,

… … (3)

… … (3)

Hence work of P-MATRIX-MULTIPLY algorithm is .

.

Span:

Span of the doubly nested parallel for loop of P-MATRIX-MULTIPLY

algorithm is … …

(4)

… …

(4)

P-MATRIX-MULTIPLY-RECURSIVE, there are two groups of spawned

recursive calls. Therefore, the span  of

P-MATRIX-MULTIPLY-RECURSIVE will be by the following

recurrence,

of

P-MATRIX-MULTIPLY-RECURSIVE will be by the following

recurrence,

Applying master theorem (In the analysis of the algorithm, master theorem gives the solution in asymptotic term for the recurrence relation),

… … (5)

… … (5)

Therefore total span  of the

above algorithm will be as following,

of the

above algorithm will be as following,

… … (6)

… … (6)

.

.c.

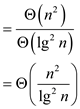

Parallelism:

Parallelism is the ratio of work by span that is ratio of

by

by .

.

Therefore,

Parallelism=

=

=

Parallelism for  matrices

will be,

matrices

will be,

=

Parallelism of P-MATRIX-MULTIPLY-RECURSIVE is .

.

Therefore the present algorithm that is P-MATRIX-MULTIPLY has 10 times less parallelism compared to P-MATRIX-MULTIPLY-RECURSIVE algorithm.

Multithreaded matrix algorithm

Spawn is a subroutine that executes or run at the same time as that of parent. So by the

Spawning both parent and child work simultaneously. The main motive behind for evolving the concept of spawning is to achieve parallelism, which results increase the overall performance of computer.

Work is defined as total time required for completing the entire multithreaded computation on a single processor.

Span is defined as the maximum time required for completing the strands along any path in the directed acyclic graph (DAG). It is an expensive path which contains maximum number of strands.

Work is the running time of a computation on a single processor.

Consider it as .

.

Now suppose there are unlimited numbers of processors. Then the

span is denoted by

The ratio of  and

and ,

that is

,

that is  gives the

parallelism of the multithreaded computation.

gives the

parallelism of the multithreaded computation.

a.

In order to make LU-DECOMPOSITION Algorithm parallel, replace ordinary ‘for’ loop with the Parallel ‘for’ loop so that all iteration of parallel ‘for’ loop run simultaneously. The Parallel LU-DECOMPOSITION Algorithm is as follow:

LU-DECOMPOSITION +

+

Let and

and

be

new

be

new  matrices

matrices

Initialize with

with

below the diagonal

below the diagonal

Initialize with

with

on

the diagonal and

on

the diagonal and  above the

diagonal

above the

diagonal

//Use parallel loop to initialise elements of A matrix with elements of U

//matrix that is with 0

parallel for  to

to

//initialize ukk element with the akk element

//use loop to traverse to all row.

parallel for  to

to

//Divide A matrix element with the diagonal element of U matrix

//use loop to traverse to all row and all column.

parallel for  to

to

parallel for  to

to

//Subtract L, U matrix product from A matrix.

//return L and U matrix

return  and

and

Analyzing the above modified algorithm:

Here three methods which are work, span and parallelism are used for analyzing the algorithm which is as follow:

Work:

Work of above algorithm can calculate by replacing parallel for loop with ordinary for loop and thus calculating its running time.

Since there are 3 nested for loop each running for  times

therefore, work

times

therefore, work  will be:

will be:

Span:

Span of the parallel for loop running for  will

be

will

be .

.

will be,

will be,

Parallelism:

Parallelism is the ratio of work by span that is ratio of

by

by .

.

Therefore,

Parallelism=

=

b.

In order to make LUP-DECOMPOSITION Algorithm parallel replace ordinary for loop with the Parallel for loop so that all iteration of parallel for loop run simultaneously.

The Parallel LUP-DECOMPOSITION Algorithm is as follow:

LUP-DECOMPOSITION

//find number of rows in A matrix

Let be a new

array

be a new

array

//initialize elements of array with 1 to n numbers

parallel for  to

to

//use loop to initialize elements of P matrix with 0.

parallel for  to

to

parallel for  to

to

//Check particular ith row and kth column element is

//greater than P matrix element or not

if

//store particular ith row and kth column of A matrix in

// P matrix

//check P is equal to 0 or not.

if

error “singular matrix”

//Exchange kth and k’th

element of  array

array

exchange with

with

//use loop exchange to exchange kth and k’th row of A matrix.

parallel for  to

to

exchange with

with

//use nested loop to traverse to all row and all column.

parallel for  to

to

//Divide ith row and kth column element of A matrix

//with the ith row and kth column element of A matrix

parallel for  to

to

Analyzing the above modified algorithm:

Here three methods which are work, span and parallelism are used for analyzing the algorithm which is as follow:

Work:

Work of above algorithm can calculate by replacing parallel for loop with ordinary for loop and thus calculating its running time.

Since there are 3 nested for loop each running for  times

therefore, work

times

therefore, work  will be:

will be:

Span:

Span of the parallel for loop running for  will be

will be

.

.

will be:

will be:

Parallelism:

Parallelism is the ratio of work by span that is ratio of

by

by .

.

Therefore,

Parallelism=

c.

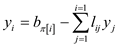

In order to make LUP-SOLVE Algorithm parallel replace ordinary for loop with the Parallel for loop so that all iteration of parallel for loop runs simultaneously. The Parallel LUP-SOLVE Algorithm is as follow:

LUP-SOLVE

//Find number of row in L matrix

let be a new

vector of length

be a new

vector of length

//use loop to solve yusing forward substitution

parallel for  to

to

//use loop to solve xusing backward substitution

parallel for  down

to

down

to

return x

Analyzing the above modified algorithm:

Here three methods which are work, span and parallelism are used for analyzing the algorithm which is as follow:

Work:

Work of above algorithm can calculate by replacing parallel for loop with ordinary for loop and thus calculating its running time.

Since there are 3 nested for loop each running for  times

therefore, work

times

therefore, work  will be:

will be:

Span:

Two parallel for loop used in the above LUP-SOLVEalgorithm hence Span of the above algorithm is

Parallelism:

Parallelism is the ratio of work by span that is ratio of

by

by .

.

Therefore,

Parallelism=

d.

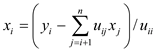

Consider the equation (28.11), (28.12) and (28.13) on section 28.2 of the textbook,

Consider the procedure and recurrence relation on section 28.2 of the textbook,

Since

Work and Span:

So works measure as taking every inverse matrix expression in series manner means with-out applying any parallel concept. This concept used in span measurement,

These values come when multiplication not taking a constant time but addition does.

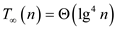

Inverse of a matrix takes time of polynomial of power two in work but in span it takes as a logarithm of power two and in last multiplication process increase the power from 2 to 4.

So,Work becomes

Span becomes:

Parallelism:

Parallelism is the ratio of work by span that is ratio of

by

by .

.

Therefore,

Parallelism=

Saving temporary space in matrix multiplication

Spawn is a subroutine that executes or run at the same time as that of parent. So by the

Spawning both parent and child work simultaneously. The main motive behind for evolving the concept of spawning is to achieve parallelism, which results increase the overall performance of computer.

Work is defined as total time required for completing the entire multithreaded computation on a single processor.

Span is defined as the maximum time required for completing the strands along any path in the directed acyclic graph (DAG). It is an expensive path which contains maximum number of strands.

Work is the running time of a computation on a single processor.

Consider it as .

.

Now suppose there are unlimited numbers of processors. Then the

span is denoted by

The ratio of  and

and ,

that is

,

that is  gives the

parallelism of the multithreaded computation.

gives the

parallelism of the multithreaded computation.

a.

A recursive multithreaded algorithm that removes the essence

for the ephemeral matrix say  at

the cost of increasing the span to

at

the cost of increasing the span to

It can be done by computing  and

inserting sync at a location as mentioned in the following

algorithm,

and

inserting sync at a location as mentioned in the following

algorithm,

P-MATRIX-MULTIPLY

//Find number of rows in X array

//Use nested loop to initialize each element of Z matrix with 0 and then multiply

//X and Y matrix by calling another function

parallel for  to

to

parallel for  to

to

//Call function to multiply both matrix and store it simultaneously into

//another matrix

P-MATRIX-MULTIPLY-RECURSIVE

P-MATRIX-MULTIPLY-RECURSIVE

//Find number of rows in X array

//Check number of rows is 1 or not.

if

//multiply X and Y matrix and then store in Z matrix

else partition  ,

, and

and

into

into

sub-matrices

sub-matrices

Spawn P-MATRIX-MULTIPLY-RECURSIVE

spawn P-MATRIX-MULTIPLY-RECURSIVE

spawn P-MATRIX-MULTIPLY-RECURSIVE

P-MATRIX-MULTIPLY-RECURSIVE

sync

spawn P-MATRIX-MULTIPLY-RECURSIVE

spawn P-MATRIX-MULTIPLY-RECURSIVE

spawn P-MATRIX-MULTIPLY-RECURSIVE

P-MATRIX-MULTIPLY-RECURSIVE

sync

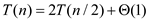

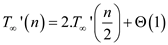

The algorithm takes  time by

the following recurrence relation:

time by

the following recurrence relation:

and

and

The span is

b.

Work:

The work of P-MATRIX-MULTIPLY procedure can be calculated by serializing the parallel for loop and calculating its running time and comparing it with procedure P-MATRIX-MULTIPLY-RECURSIVE.

Since both of these two procedures are executing one by one therefore the procedure having maximum work will be the total work done.

Work performed by doubly nested parallel for loop of

P-MATRIX-MULTIPLY algorithm is  … …

(1)

… …

(1)

Now there is another procedure P-MATRIX-MULTIPLY-RECURSIVE. Its

work  can be

defined by recurrence given below (consider 8 recursive

function),

can be

defined by recurrence given below (consider 8 recursive

function),

… … (2)

… … (2)

Therefore from (1) and (2) total work  will

be,

will

be,

… … (3)

… … (3)

Hence work of P-MATRIX-MULTIPLY algorithm is .

.

Span:

Span of the doubly nested parallel for loop of P-MATRIX-MULTIPLY

algorithm is … …

(4)

… …

(4)

P-MATRIX-MULTIPLY-RECURSIVE, there are two groups of spawned

recursive calls. Therefore, the span  of

P-MATRIX-MULTIPLY-RECURSIVE will be by the following

recurrence,

of

P-MATRIX-MULTIPLY-RECURSIVE will be by the following

recurrence,

Applying master theorem (In the analysis of the algorithm, master theorem gives the solution in asymptotic term for the recurrence relation),

… … (5)

… … (5)

Therefore, total span  of the

above algorithm will be as following,

of the

above algorithm will be as following,

… … (6)

… … (6)

Hence work of P-MATRIX-MULTIPLY algorithm is .

.

c.

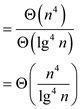

Parallelism:

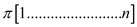

The correctness can be proved by performing induction on the recursive calls that are made to P-SCAN-2-AUX. On a single call, n is set to 1 and the algorithm sets y [1] = x [1] which is correct. The first half of the array has elements which are computed accurately because fewer recursive calls are required. The y[i] can be computed as follows:

The above term is computed with P-SCAN-2-AUX and the time taken

is T(n) =  . The span

is

. The span

is  and the parallelism is

and the parallelism is

d.

Fill the line 8 of P-SCAN-UP by  and lines 5

and 6 of P-SCAN-DOWN by v and

and lines 5

and 6 of P-SCAN-DOWN by v and

e.

The P-SCAN-UP satisfies the recurrence  =

=  . The

running time of P-SCAN-DOWN is also

. The

running time of P-SCAN-DOWN is also  . The

P-SCAN-3 also satisfies T(n) =

. The

P-SCAN-3 also satisfies T(n) =  .

.

The span of P-SCAN-UP and P-SCAN-DOWN is  .

.

Thus, the span of P-SCAN-3 is  . The

parallelism is

. The

parallelism is

Multithreading a simple stencil calculation

Spawn is a subroutine that executes or run at the same time as that of parent. So by the

Spawning both parent and child work simultaneously. The main motive behind for evolving the concept of spawning is to achieve parallelism, which results increase the overall performance of computer.

Work is defined as total time required for completing the entire multithreaded computation on a single processor.

Span is defined as the maximum time required for completing the strands along any path in the directed acyclic graph (DAG). It is an expensive path which contains maximum number of strands.

Work is the running time of a computation on a single processor.

Consider it as .

.

Now suppose there are unlimited numbers of processors. Then the

span is denoted by

The ratio of  and

and ,

that is

,

that is  gives the

parallelism of the multithreaded computation.

gives the

parallelism of the multithreaded computation.

a.

For simplicity assume that  is an exact

power of 2.

is an exact

power of 2.

Divide matrix into

four

matrix into

four  sub

matrices.

sub

matrices.

Assuming that BASE-CASE is given to us,

SIMPLE-STENCIL

//check length of array is 1.

if

=BASE-CASE

=BASE-CASE

else

// Calculate sub-matrix

SIMPLE-STENCIL

// Calculate sub-matrices A12and A21in parallel.

Spawn SIMPLE-STENCIL

SIMPLE-STENCIL

sync

// Calculate sub-matrix

SIMPLE-STENCIL

Analyzing the algorithm:

Here three methods which are work, span and parallelism are used for analyzing the algorithm which is as follow:

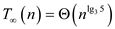

Work:

Work  of

SIMPLE-STENCIL can be calculating by computing the running time of

its serialization.

of

SIMPLE-STENCIL can be calculating by computing the running time of

its serialization.

That can be given by following recurrences,

Applying master theorem (In the analysis of the algorithm, master theorem gives the solution in asymptotic term for the recurrence relation),

Span:

Since one of the SIMPLE-STENCIL calls is spawned therefore, Span can be given by following recurrences,

Applying master theorem (In the analysis of the algorithm, master theorem gives the solution in asymptotic term for the recurrence relation),

Parallelism:

Parallelism is the ratio of work by span that is ratio of

by

by .

.

Therefore,

Parallelism=

b.

Modification in above algorithm,

P-STENCIL-MOD

//check length of array is 1.

if

BASE-CASE

BASE-CASE

else

//Group1: compute sub-matrix

P-STENCIL-MOD

//Group2:compute sub-matrices  and

and

in

parallel

in

parallel

Spawn P-STENCIL-MOD

P-STENCIL-MOD

sync

//Group3:compute sub-matrices  ,

,  , and

, and

Spawn P-STENCIL-MOD

Spawn P-STENCIL-MOD

P-STENCIL-MOD

sync

//Group4:compute sub-matrices  and

and

Spawn P-STENCIL-MOD

P-STENCIL-MOD

sync

//Group5:compute sub-matrix

P-STENCIL-MOD

It is quite easy to see that  matrices

have been divided into nine

matrices

have been divided into nine  matrices.

matrices.

In above figure same group specifies that they can be computed parallel.

Analyzing the above modified algorithm:

Here three methods which are work, span and parallelism are used for analyzing the algorithm which is as follow:

Work:

Work of the above algorithm can be calculated by computing the running time of its serialization.

That can be given by following recurrences,

Applying master theorem (In the analysis of the algorithm, master theorem gives the solution in asymptotic term for the recurrence relation),

Span:

It is quite obvious that span can be given by follow recurrences,

Parallelism:

Parallelism is the ratio of work by span that is ratio of

by

by .

.

Therefore,

Parallelism=

=

c.

As per question divide  matrices in

matrices in

,

,

matrices.

matrices.

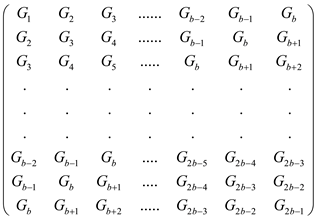

Generalizing the X we can get the following matrices for above configuration,

Hence the  sub-problems can be solved into 2b-1 groups.

sub-problems can be solved into 2b-1 groups.

Analyzing the above algorithm:

Here three methods which are work, span and parallelism are used for analyzing the algorithm which is as follow:

Work:

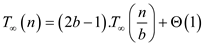

Work can be given by following recurrences,

Span:

From above span can be given by following recurrences,

Parallelism:

Parallelism is the ratio of work by span that is ratio of

by

by .

.

Therefore,

Parallelism=

=

=

=

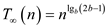

To prove that parallelism is  for any

choice of

for any

choice of  it is

necessary to prove that exponent of

it is

necessary to prove that exponent of  in the

parallelism is strictly less than 1 for any choice of

in the

parallelism is strictly less than 1 for any choice of .

.

,

,

Therefore,

Hence,

Therefore,

=

Where,

Hence proved

d .

Pseudocode for a multithreaded algorithm,

Parallel-STENCIL

//find number of rows in A array.

//Calculate all entries on the anti-diagonal and above it

for  to

to

parallel for  to

to

=BASE-CASE

=BASE-CASE

//Calculate all entries below the anti-diagonal

for  to

to

parallel for  to

to

=BASE-CASE

=BASE-CASE

Analyzing the above modified algorithm:

Here three methods which are work, span and parallelism are used for analyzing the algorithm which is as follow:

Work:

Work of the above algorithm can calculate by replacing the parallel for loop with ordinary for loop and thencomputing its running time.

Since both nested for loop are in series therefore total running time will be some of those,

Since both of the nested for loop runs for ,

,

Therefore work  of above

algorithm will be as follow,

of above

algorithm will be as follow,

Span:

Span of a parallel for loop having  iteration is

given by

iteration is

given by .

.

Hence span , of above

algorithm having iterations as,

, of above

algorithm having iterations as,

will be

given by

will be

given by

Parallelism:

Parallelism is the ratio of work by span that is ratio of

by

by .

.

Therefore,

Parallelism=

Randomized multithreaded algorithm:

Spawn:

• It is a subroutine that executes or runs at the same time as that of its parent.

• By spawning, both parent and child work simultaneously.

• The main motive behind spawning is to achieve parallelism, which results in increasing the overall performance of the computer.

Work:

• It is defined as the total time required for completing the entire multithreaded computation on a single processor.

Span:

• It is defined as the maximum time required for completing the strands along any path in the directed acyclic graph (DAG).

• It is an expensive path which contains maximum number of strands.

• Work is the running time of a computation on a single

processor. Consider it as .

.

• Suppose there are unlimited numbers of processors. Then the

span is denoted by

• The ratio of  and

and ,

that is,

,

that is,  gives the

parallelism of the multithreaded computation.

gives the

parallelism of the multithreaded computation.

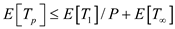

a.

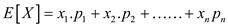

is an

expectation value associated with a randomized algorithm.

is an

expectation value associated with a randomized algorithm.

It is defined as  ,

where

,

where is a random

number generated by randomized algorithm and

is a random

number generated by randomized algorithm and  is its

probability of getting generated.

is its

probability of getting generated.

Since is the

probability associated with

is the

probability associated with  th

randomized outcome, its summation will be 1.

th

randomized outcome, its summation will be 1.

That is,

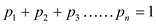

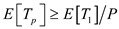

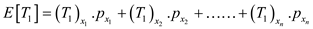

Work law  can be

defined as

can be

defined as  .

.

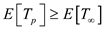

Span law  can be

defined as

can be

defined as .

.

Greedy scheduler bound  can be

defined as

can be

defined as .

.

b.

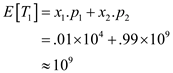

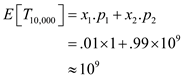

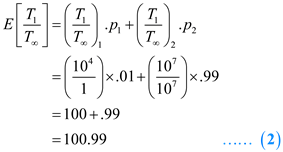

Consider the following data:

Randomized multithreaded algorithm for which 1% of the time is,

Randomized multithreaded algorithm for which 99% of the time is,

Now,

Speed up:

Speed up:

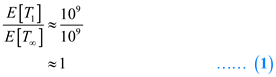

It is obvious from (1) and (2) that speedup of

multithreaded algorithm should be defined as  ¸ rather

than

¸ rather

than .

.

Parallelism of a randomized multithreaded algorithm should be

defined as the ratio .

.

is defined

as

is defined

as

Here,  is the work

of the

is the work

of the  th

outcome and

th

outcome and  is

probability of that outcome.

is

probability of that outcome.

Thus,  is an

expected value of the work for a long range of outcomes. In other

words, it is the average work expected.

is an

expected value of the work for a long range of outcomes. In other

words, it is the average work expected.

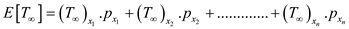

is defined

as

is defined

as

Here,  is the SPAN

of the

is the SPAN

of the  th

outcome and

th

outcome and  is

probability of that outcome.

is

probability of that outcome.

Thus,  is an

expected value of the span for a long range of outcomes. In other

words, it is the average span expected.

is an

expected value of the span for a long range of outcomes. In other

words, it is the average span expected.

Since parallelism of an algorithm should be approximately equal to an average case scenario, therefore, the parallelism should be the average case of all outcomes.

Hence, parallelism should be defined as .

.

d.

Use nested parallelism to make Randomized-Quick-sort algorithm on section 7.3 of the textbook as a multithreaded algorithm.

In following algorithm, p and r represent the range of the array.

P-RANDOMIZED-QUICKSORT

//Check range of array is valid or not.

if

//call function to divide the array into two halves

RANDOMIZED-PARTITION

RANDOMIZED-PARTITION

spawn P-RANDOMIZED-QUICKSORT

P-RANDOMIZED-QUICKSORT

//Function to divide the array

RANDOMIZED-PARTITION

// Function to choose a random value between  and

and

including

boundaries.

including

boundaries.

if  RANDOM

RANDOM

exchange with

with

// partitioning the array (PARTITION ) on the

section 7.1 of the textbook

) on the

section 7.1 of the textbook

return PARTITION

e.

In above algorithm, at each level of recursion, the partition is done by RANDOMIZED-PARTITION which places a constant fraction of the elements on one side of the partition.

The recursion tree has depth of  and work

done at each level is

and work

done at each level is .

.

Therefore, total work .

.

The recursion tree has depth of  and parallel

time at taken each level is

and parallel

time at taken each level is  .

.

Therefore, total span is given as

shown below:

is given as

shown below:

Parallelism is the ratio of work by span, that is, ratio of

by

by .

.

Therefore, parallelism